The Artificial Intelligence and Data Act (AIDA) is a large part of the Canadian government’s response to the wave of AI technologies that blew up seemingly out of nowhere in recent years. Ryan Black, a partner at DLA Piper and formerly the lead Clinic Adjunct for the Centre for Business Law at Allard, posted a detailed comparison between the initially proposed AIDA and its newest version. The post highlights newly added text in blue and removed text in red.

Here is the link: Redline of proposed AIDA amendments

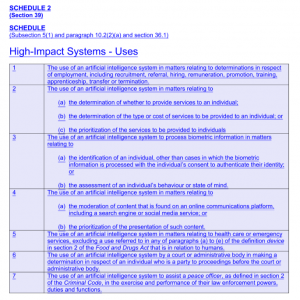

Although the bill has not yet passed into law, it is still very interesting to follow how the legislators are changing and evolving their language to match current legal understandings and needs. The point of interest for me is how the Act defines “high-impact systems”.

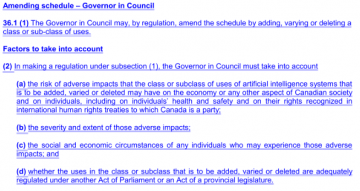

When I previously went through the Act in detail, this definition was not yet clearly set out. Instead, it was to be decided by the Governor in Council based on a set of non-exhaustive factors such as “The severity of potential harms; The scale of use; Imbalances of economic or social circumstances, or age of impacted persons; and the degree to which the risks are adequately regulated under another law.”

However, the current version of AIDA clearly lists which systems fall under the “high-impact systems” it intends to regulate, while also allowing the Governor in Council to add more in the future.

These changes are particularly useful because, with a growing number of lawsuits like Andersen v Stability AI Ltd, in which artists are suing various companies for using their artwork to train AI art generators without permission, the AIDA may offer a way to step in and help Canadian creators in similar situations.

Many in the Canadian art industry are already feeling the adverse economic impacts of AI programs such as DALL-E and Midjourney. Although these systems may not currently fall under the AIDA’s definition of a “high-impact system”, real social and economic pressure may push the Governor in Council to add classes or subclasses of AI systems to address the issue.

Copyright & Social Media
